SELECT itemid, Qty, actualPrice, subtotal FROM SalesOrderDetail s WHERE actualPrice< 1000 COMPUTE SUM(Qty), SUM(subtotal)

Labels

- AVG() Function (1)

- Aggregate Functions (1)

- Candidate Key (1)

- Composite and Compound Keys (1)

- EXISTS (1)

- FIRST() Function (1)

- Group By Statement (1)

- Having Clause (1)

- INSERT (1)

- LIKE (1)

- SELECT AS CLAUSE (1)

- SQL Distinct Unique Records (1)

- SQL IN (1)

- SQL Joins (1)

- SQL Last() Function (1)

- SQL NULL Conditional (1)

- SQL Not Exists (1)

- SQLComparison Operators (1)

- Sql Max() Function (1)

- Sql Min() Function (1)

- Sql SUM() Function (1)

- WHERE (1)

Blog Archive

-

▼

2010

(73)

-

►

May

(40)

- Generate Mapping against target Step

- Pentaho Spoon Installation

- Group by SQL Query

- How to Insert Extracted Values in Pentaho Spoon

- Loading XML Data Using Pentaho ETL Tool

- SQL Query For Monthly Calculation

- Concatenation in Pentaho Kettle

- How to Split a String in pentaho Kettle

- Date to String Conversion in Pentaho Kettle

- How to get Distinct Count in Pentaho

- How to Write ETL Script in Pentaho

- Check Table Column Existence in Pentaho

- Performing Calculations in Pentaho Spoon

- Aggregate Functions in Pentaho Spoon

- What is Checksum in Pentaho Spoon

- Hex to integer Conversion in Kettle

- How to Set Environment Variables in Kettle

- What is Get Variable in Pentaho Kettle

- What is Set Variable in Pentaho Kettle

- Storing Transformations and Jobs in local Files Sy...

- What is Pentaho

- ETL Scripting with Open Source ETL Tools

- SELECT AS CLAUSE

- Calculations in SQL Query

- SQL Distinct Unique Records

- SQL IN, NOT IN and NOT BETWEEN Clauses

- SQL Between Clause

- SQL Multiple Conditional Queries

- SQL NULL Conditional Queries

- SQL Comparison Operators

- Candidate Key

- Super Key

- Composite & Compound keys

- Foreign key

- Alternate or Secondary Key

- Surrogate Key

-

►

May

(40)

Wednesday, July 21, 2010

Calculate Grand Total in SQL Query

at

10:09 AM

Posted by

M.Junaid Khan

at

10:09 AM

Posted by

M.Junaid Khan

0

comments

0

comments

Wednesday, June 23, 2010

Date wise SQL Query

at

8:54 AM

Posted by

M.Junaid Khan

at

8:54 AM

Posted by

M.Junaid Khan

0

comments

0

comments

Select employee_id, employee_name (Select count(*) from employee_leave el where e.employee_id=el.employee_id and el.leave_date between e.hire_Date and '2010-06-20 23:59:59') from tbl_employee e where e.hire_date between '2010-06-01 00:00:00' and '2010-06-01 23:59:59'here in the above query for extracting the total leaves the final date has been set from the employee hire date.

Tuesday, May 25, 2010

Generate Mapping against target Step

at

11:20 AM

Posted by

M.Junaid Khan

at

11:20 AM

Posted by

M.Junaid Khan

0

comments

0

comments

Pentaho Spoon Installation

at

10:58 AM

Posted by

M.Junaid Khan

at

10:58 AM

Posted by

M.Junaid Khan

1 comments

1 comments

chmod +x *.sh

Spoon.bat: launch Spoon on the Windows platform.

sh spoon.sh: launch Spoon on a Unixlike

platform: Linux, Apple OSX, Solaris, ...

If you want to make a shortcut under the Windows platform an icon is provided: “spoon.ico” to set the correct icon. Simply point the shortcut to the Spoon.bat file.

Supported platforms

- The Spoon GUI is supported on the following platforms:

- Microsoft Windows: all platforms since Windows 95, including Vista

- Linux GTK: on i386 and x86_64 processors

- Apple's OSX: works both on PowerPC and Intel machines

- Solaris: using a Motif interface (GTK optional)

- AIX: using a Motif interface

- HPUX:

Friday, May 21, 2010

Group by SQL Query

at

9:36 AM

Posted by

M.Junaid Khan

at

9:36 AM

Posted by

M.Junaid Khan

0

comments

0

comments

Select c.country_name, count(s.id) from tbl_sales s inner join tbl_country c on s.country_id=c.country_id where s.saels_date between '2010-05-01 00:00:00' and '2010-05-31 23:59:59' group by c.coutnry_name

Sunday, May 16, 2010

How to Insert Extracted Values in Pentaho Spoon

at

11:47 AM

Posted by

M.Junaid Khan

at

11:47 AM

Posted by

M.Junaid Khan

0

comments

0

comments

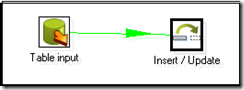

To insert the extracted records from the transactional source database into the destination or data warehouse following is simple way to do it.

Open the Pentaho Spoon go to file menu and open new Transformation.

in the design mode drill down the Input Folder and drag & drop the Table Input Step, double click the Table Input and set the source database (How to Set Connection Parameters in Pentaho Spoon)connection parameters this will extract all required fields

Again go to the design mode and this time drag & drop the INERT / UPDATE Step lying under the OUT PUT Folder now hold the Shift key and place your mouse icon over the TABLE INPUT and drag upto the INERT / UPDATE Step this will generate a Green color Arrow called a hope with its tail at the TABLE INPUT and head towards INERT / UPDATE Step.

You can enable, disable or even delete the hope by placing your mouse over it and with right click. now double click the INERT / UPDATE Step

and set target Database Connection by clicking the New Button for the connection and Edit if you have it already.

For the first grid Click the Get Fields Button this will fill grid with all values you have queried in the previous Table Input Step here in this grid keep only those fields on the basis of which you will insert record.

Loading XML Data Using Pentaho ETL Tool

at

6:47 AM

Posted by

M.Junaid Khan

at

6:47 AM

Posted by

M.Junaid Khan

0

comments

0

comments

Go to the design mode, drill down the input folder and drag & drop the XML Input Step.

Below this Grid there is a Show File(s) options that allow you to view your complete files addresses.

Nnow go to the next tab of Fields followed by Content tab.

To view all these contents of your XML file you can use the XML OUTPUT Step available under the Out put folder.

SQL Query For Monthly Calculation

at

6:29 AM

Posted by

M.Junaid Khan

at

6:29 AM

Posted by

M.Junaid Khan

0

comments

0

comments

Saturday, May 15, 2010

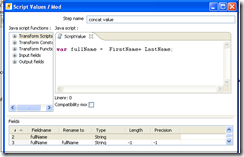

Concatenation in Pentaho Kettle

at

11:19 PM

Posted by

M.Junaid Khan

at

11:19 PM

Posted by

M.Junaid Khan

0

comments

0

comments

How to Split a String in pentaho Kettle

at

10:54 PM

Posted by

M.Junaid Khan

at

10:54 PM

Posted by

M.Junaid Khan

0

comments

0

comments

var ID = employee [0];

var Name= employee [1];

var Address = employee [2];

Thursday, May 13, 2010

Date to String Conversion in Pentaho Kettle

at

9:04 AM

Posted by

M.Junaid Khan

at

9:04 AM

Posted by

M.Junaid Khan

0

comments

0

comments

How to get Distinct Count in Pentaho

at

8:35 AM

Posted by

M.Junaid Khan

at

8:35 AM

Posted by

M.Junaid Khan

0

comments

0

comments

How to Write ETL Script in Pentaho

at

8:12 AM

Posted by

M.Junaid Khan

at

8:12 AM

Posted by

M.Junaid Khan

0

comments

0

comments

name the connection, if working on the local system name local host else IP

name the connection, if working on the local system name local host else IPWednesday, May 12, 2010

Check Table Column Existence in Pentaho

at

8:52 AM

Posted by

M.Junaid Khan

at

8:52 AM

Posted by

M.Junaid Khan

0

comments

0

comments

- Drag & Drop the above icon.

- Double click the step and name it , defined the connection for MYSQL or SQL SERVER etc.

- Table Name: The name of the column to whom the column belongs.

- Table name in Field: Enabled it if you want to read the name of the table from the input field.

- Tablename Field:Set fields here that have parameters and its type

- Columnname Field:Set the name of the column field reading from the input field.

- Result fieldname:Resulting Boolean flag that shows weather it exist or not.

Performing Calculations in Pentaho Spoon

at

8:21 AM

Posted by

M.Junaid Khan

at

8:21 AM

Posted by

M.Junaid Khan

0

comments

0

comments

- Go to Transformation in the design mode

- Drill Down the Transformation folder and drag & drop the Calculator

-

- Double Click the Calculator Step a pop window same as below will appear

- In the New Field column name your new Field

- Select the type of calculation from the list box on the fields you want to perform calculations.

- Field A, Field B and Field C combos are available to select your fields that are need to be performed calculations

- Select the value type from the combo box and set decimal precision.

Aggregate Functions in Pentaho Spoon

at

7:57 AM

Posted by

M.Junaid Khan

at

7:57 AM

Posted by

M.Junaid Khan

0

comments

0

comments

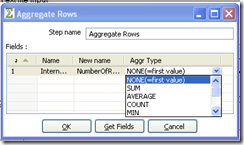

-

Drill Down the Input in the design mode and select Text Input File -

-

Double Click the Text File Input in the File tab and brows your file add it to the selected path -

In the Content tab select File Type -

Go to Fields tab and click the Get Fields , it will extract all the fields along with data their data types, reading each coma separated field -

Go to the design mode again find Deprecated the 3rd last folder drill down it and drag & drop the Aggregate Rows Step as the same icon mentioned below. -

-

Double click the Aggregate Rows a pop window will open click Get Fields it will extract all the fields as it has read from the Text Input File, set the name for each field in the New Name column and finally select the aggregate function from the combo box.

What is Checksum in Pentaho Spoon

at

7:23 AM

Posted by

M.Junaid Khan

at

7:23 AM

Posted by

M.Junaid Khan

0

comments

0

comments

- Go to Design

- Drill Down Transformation

-

Drag & Drop Add a Checksum -

-

Name the Step -

Define the Checksum Algorithm most used Algorithm for Checksum is MD-5 -

Click the Get Fields Button, this will extract and show all the fields coming from the previous step at which your going to apply checksum might be extracting using Input Step from the database table or Stored Procedure

Tuesday, May 11, 2010

Hex to integer Conversion in Kettle

at

12:10 PM

Posted by

M.Junaid Khan

at

12:10 PM

Posted by

M.Junaid Khan

0

comments

0

comments

Drill Down the Scripting step.

Drag and drop the Modified Java Script Value.

var nummerke = Packages.java.lang.Integer.valueOf(Hex.getString(), 16).intValue();

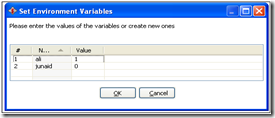

How to Set Environment Variables in Kettle

at

9:05 AM

Posted by

M.Junaid Khan

at

9:05 AM

Posted by

M.Junaid Khan

0

comments

0

comments

Monday, May 10, 2010

What is Get Variable in Pentaho Kettle

at

12:16 PM

Posted by

M.Junaid Khan

at

12:16 PM

Posted by

M.Junaid Khan

0

comments

0

comments

- Go to the Job Folder drag & drop a Get Variable Step

- Nam the value of accessing variable that you can use in other steps

- in the second column give the same name of the required variable as you have declared it in Set Variable Step in below format

- ${variable} or %%variable%%

What is Set Variable in Pentaho Kettle

at

11:56 AM

Posted by

M.Junaid Khan

at

11:56 AM

Posted by

M.Junaid Khan

0

comments

0

comments

Sunday, May 9, 2010

Storing Transformations and Jobs in local Files System

at

5:48 AM

Posted by

M.Junaid Khan

at

5:48 AM

Posted by

M.Junaid Khan

0

comments

0

comments

What is Pentaho

at

5:33 AM

Posted by

M.Junaid Khan

at

5:33 AM

Posted by

M.Junaid Khan

0

comments

0

comments

sources.Kitchen is a program that can execute jobs designed by Spoon in XML or in a database repository. Usually

Transformations and Jobs can describe themselves using an XML file or can be put in a Kettle database

repository. This information can then be read by Pan or Kitchen to execute the described steps in the

transformation or run the job.

ETL Scripting with Open Source ETL Tools

at

2:55 AM

Posted by

M.Junaid Khan

at

2:55 AM

Posted by

M.Junaid Khan

2

comments

2

comments

- Functional layer: Core functional ETL processing (extract, transform, and load).

- Operational management layer: Job-stream definition and management, parameters, scheduling, monitoring, communication and alerting.

- Audit, balance and control (ABC) layer: Job-execution statistics, balancing and controls, rejects- and error-handling, codes management.

- Utility layer: Common components supporting all other layers.

- Apatar

- CloverETL

- Flat File Checker

- Jitterbit 2.0

- Pentaho Data Integration (now[update] included in OpenOffice Base)

- RapidMiner

- Scriptella

- Talend Open Studio

Saturday, May 8, 2010

SELECT AS CLAUSE

at

10:58 PM

Posted by

M.Junaid Khan

at

10:58 PM

Posted by

M.Junaid Khan

0

comments

0

comments

In SQL Query you can define column heading jus write heading near to field name with out any space but if you want to make space in column heading then use AS clause like wise

Column Heading with out AS Clause

SELECT LASTNAME, SALARY TotalSalary

FROM EMP

WHERE WORKDEPT = 'C01'

ORDER BY 2 DESC

Column Heading with space

SELECT LASTNAME, SALARY + BONUS + COMM AS TOTAL EANINGS

FROM EMP

WHERE WORKDEPT = 'C01'

ORDER BY TOTAL_EANINGS DESC

Calculations in SQL Query

at

10:47 PM

Posted by

M.Junaid Khan

at

10:47 PM

Posted by

M.Junaid Khan

0

comments

0

comments

SELECT LASTNAME, SALARY + BONUS + COMM

FROM EMP

WHERE JOB = 'FIELDREP'

Which operators have total earnings less then $20,000?

SELECT LASTNAME, SALARY + BONUS + COMM

FROM EMP

WHERE JOB = 'OPERATOR'

AND SALARY + BONUS + COMM < 20000

SQL Distinct Unique Records

at

10:31 PM

Posted by

M.Junaid Khan

at

10:31 PM

Posted by

M.Junaid Khan

0

comments

0

comments

The Below Query will select all rows this will also include repetative or redundent rows i..e un necessary or duplicated rows i.e. UNIQUE Records

SELECT WORKDEPT

FROM EMP

Distinct rows:

This is will extract all the rows but will remove the duplicated rows

1.SELECT DISTINCT WORKDEPT

FROM EMP

2.SELECT DISTINCT WORKDEPT, JOB

FROM EMP

Distinct Count

One person can send many feedack / comments against this post so count the unique no of person who posted their comments

SELECT POSTED_BY,COUNT(DISTINCT COMMENTS)

FROM FEEDBACK

GROUP BY POSTED_BY

SQL IN, NOT IN and NOT BETWEEN Clauses

at

10:25 PM

Posted by

M.Junaid Khan

at

10:25 PM

Posted by

M.Junaid Khan

0

comments

0

comments

1. SELECT EMPNO, LASTNAME, SALARY

FROM EMP

WHERE WORKDEPT IN ('D11', 'D21', 'E11')

2.SELECT *

FRROM EMPLOYEE

WHERE COUNTRY IN ('PAKISTAN','UK','US','AUTRALIA' )

NOT INTo select employees that has not been hired in the month of MAY 2010

SELECT *

FROM EMPLOYEE

WHERE HIRE_DATE NOT BETWENN '2010-05-01 00:00:00' AND '2010-05-31 23:59:59'

2.SELECT *

FRROM EMPLOYEE

WHERE COUNTRY NOT IN ('PAKISTAN','UK','US','AUTRALIA' )

NOT BETWEEN

SQL Between Clause

at

10:08 PM

Posted by

M.Junaid Khan

at

10:08 PM

Posted by

M.Junaid Khan

0

comments

0

comments

Example

Select c.customer_name,i.invoice_no,i.invoice_Date

from sales s

inner join customer c on s.customer_id=c.customer_id

where s.sales_date between '2010-05-01 00:00:00' and '2010-05-31 23:59:59'

SQL Multiple Conditional Queries

at

10:03 PM

Posted by

M.Junaid Khan

at

10:03 PM

Posted by

M.Junaid Khan

0

comments

0

comments

Exampes

1.SELECT LASTNAME, SALARY

FROM EMP

WHERE SALARY > 22000

AND JOB = 'DESIGNER'

AND SEX = 'F'

Who makes more than $40,000 or is a manager?

2.SELECT LASTNAME, FIRSTNAME, SALARY, JOB

FROM EMP

WHERE SALARY > 40000 OR

JOB = 'MANAGER'

3.Which Filed Rep makes more than $2,000 in commissions or who has an education lever higher then 18?

SELECT LASTNAME, JOB, COMM

FROM EMP

WHERE (COMM > 2000 AND JOB = 'FIELDREP')

OR EDLEVEL > 16

4.SELECT LASTNAME, JOB, COMM

FROM EMP

WHERE COMM > 2000 AND (JOB = 'FIELDREP'

OR EDLEVEL > 16)

SQL NULL Conditional Queries

at

9:58 PM

Posted by

M.Junaid Khan

at

9:58 PM

Posted by

M.Junaid Khan

0

comments

0

comments

Examples:

1. SELECT DEPTNO, DEPTNAME, MGRNO

FROM DEPT

WHERE MGRNO IS NULL

SQL Server

Select top ten emplyees who do not have any manager in SQLSERVER

select top ten emplyees who do not have any manager in mysql2. SELECT TOP 10 *

FRO M EMPLOYEE

WHERE MANGAER IS NOT NULL

MYSQL

Select top ten emplyees who do not have any manager in mysql

2. SELECT *

FROM EMPLOYEE

WHERE MANGAER IS NOT NULL

limit 10

SQL Comparison Operators

at

9:45 PM

Posted by

M.Junaid Khan

at

9:45 PM

Posted by

M.Junaid Khan

0

comments

0

comments

<> Not Equal

> Greater than

>= Greater than or equal

< Less than

<= Greater than or equal

Examples:

SELECT EMPNO, LASTNAME, SALARY

FROM EMP

WHERE SALARY >= 20000

SELECT LASTNAME, FIRSTNAME, JOB

FROM EMP

WHERE JOB <> 'DESIGNER'

Saturday, May 1, 2010

Candidate Key

at

2:06 AM

Posted by

M.Junaid Khan

at

2:06 AM

Posted by

M.Junaid Khan

0

comments

0

comments

1. the relation does not have two distinct tuples with the same values for these attributes (which means that the set of attributes is a superkey)

2.there is no proper subset of these attributes for which

(1) holds (which means that the set is minimal).

Since a relation contains no duplicate tuples, the set of all its attributes is a superkey if NULL values are not used. It follows that every relation will have at least one candidate key.

The candidate keys of a relation tell us all the possible ways we can identify its tuples. As such they are an important concept for the design database schema.

Super Key

at

2:03 AM

Posted by

M.Junaid Khan

at

2:03 AM

Posted by

M.Junaid Khan

1 comments

1 comments

Composite & Compound keys

at

1:59 AM

Posted by

M.Junaid Khan

at

1:59 AM

Posted by

M.Junaid Khan

0

comments

0

comments

Foreign key

at

1:50 AM

Posted by

M.Junaid Khan

at

1:50 AM

Posted by

M.Junaid Khan

0

comments

0

comments

Alternate or Secondary Key

at

1:40 AM

Posted by

M.Junaid Khan

at

1:40 AM

Posted by

M.Junaid Khan

0

comments

0

comments

Surrogate Key

at

1:36 AM

Posted by

M.Junaid Khan

at

1:36 AM

Posted by

M.Junaid Khan

0

comments

0

comments

This definition is based on that given by Hall, Owlett and Todd (1976). Here a surrogate represents an entity in the outside world. The surrogate is internally generated by the system but is nevertheless visible to the user or application.

Surrogate (2)

This definition is based on that given by Wieringa and De Jonge (1991). Here a surrogate represents an object in the database itself. The surrogate is internally generated by the system and is invisible to the user or application.